MultiLLm ChatGPT

This AI module lets users chat with any of the available models. It calls the /api/ai-modules/multillm-chat route in the same project. The route gets the answer to the question from the chosen model (GPT, Llama 2, Claude, or Mistral) and returns it as a stream, which is then displayed in the UI.
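
The call-and-stream flow described above can be sketched on the client side roughly as follows. This is a minimal sketch, not the module's actual code: the POST method, the `model` and `question` field names, and the helper names `buildChatRequest`, `readTextStream`, and `askModel` are all assumptions for illustration. Only the route path and the list of models come from this page.

```typescript
// Hypothetical request shape: the field names `model` and `question` are
// assumptions for illustration, not the module's confirmed contract.
type ChatModel = "gpt" | "llama 2" | "claude" | "mistral";

interface ChatRequestBody {
  model: ChatModel;
  question: string;
}

// Build the fetch options for a request to the chat route.
// Using POST with a JSON body is an assumption.
function buildChatRequest(body: ChatRequestBody): RequestInit {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  };
}

// Read a streamed body chunk by chunk, passing each decoded chunk to
// onChunk and returning the full text once the stream ends.
async function readTextStream(
  stream: ReadableStream<Uint8Array>,
  onChunk: (text: string) => void = () => {},
): Promise<string> {
  const reader = stream.getReader();
  const decoder = new TextDecoder();
  let full = "";
  while (true) {
    const { done, value } = await reader.read();
    if (done) break;
    // `stream: true` keeps multi-byte characters intact across chunk boundaries.
    const text = decoder.decode(value, { stream: true });
    full += text;
    onChunk(text);
  }
  // Flush any bytes the decoder is still holding.
  const tail = decoder.decode();
  if (tail) {
    full += tail;
    onChunk(tail);
  }
  return full;
}

// Send a question to the route and stream the answer to onChunk.
async function askModel(
  model: ChatModel,
  question: string,
  onChunk: (text: string) => void,
): Promise<string> {
  const res = await fetch(
    "/api/ai-modules/multillm-chat",
    buildChatRequest({ model, question }),
  );
  if (!res.ok || !res.body) {
    throw new Error(`Chat request failed with status ${res.status}`);
  }
  return readTextStream(res.body, onChunk);
}
```

In a component, a call such as `askModel("claude", "Hello", (chunk) => setAnswer((prev) => prev + chunk))` would append each chunk to the displayed answer as it arrives. Here `setAnswer` stands for a hypothetical React state setter.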

Additionally, you can connect Supabase and store the chat in the database if required.

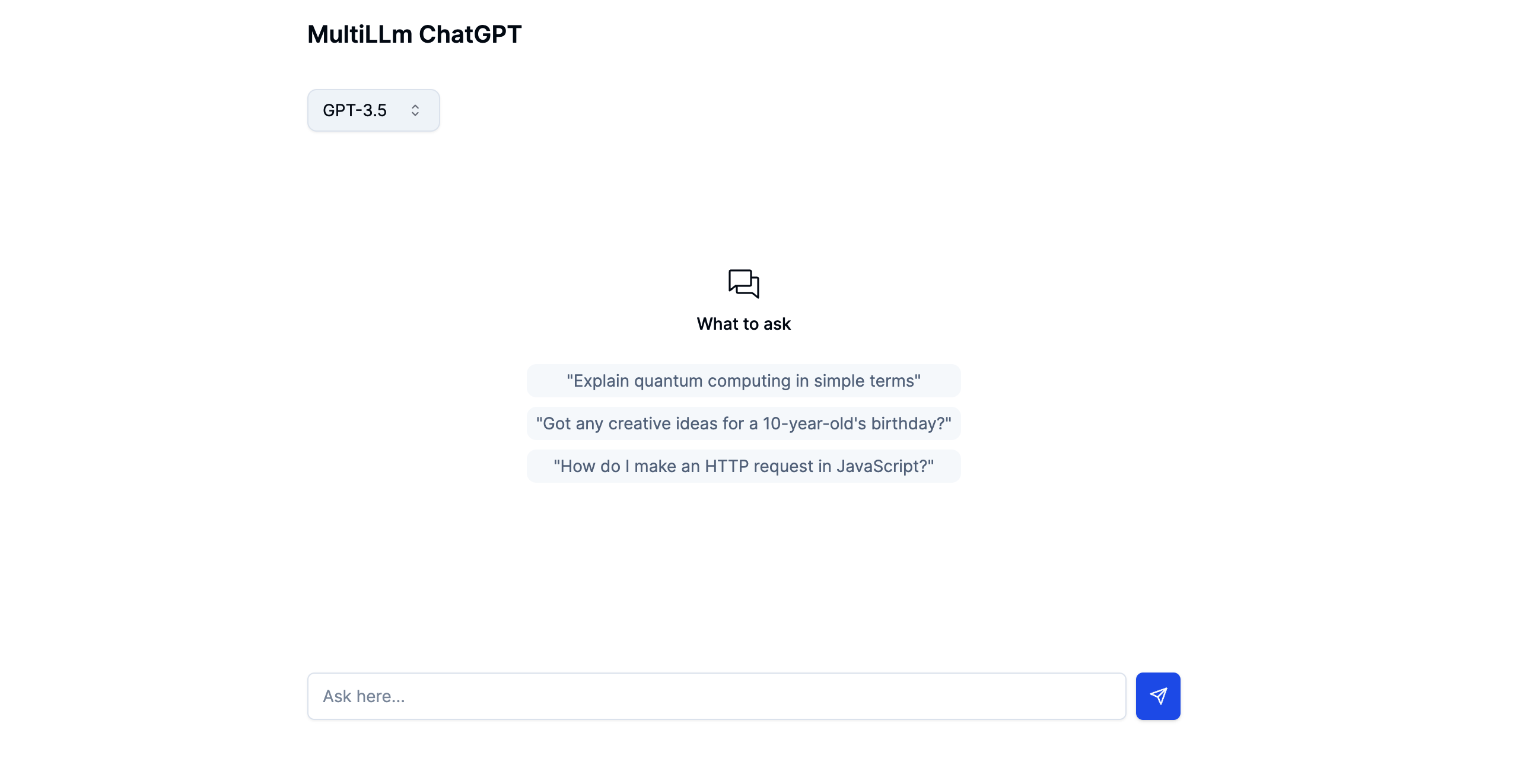

This is how it will look when you import and run the app:

How to use?

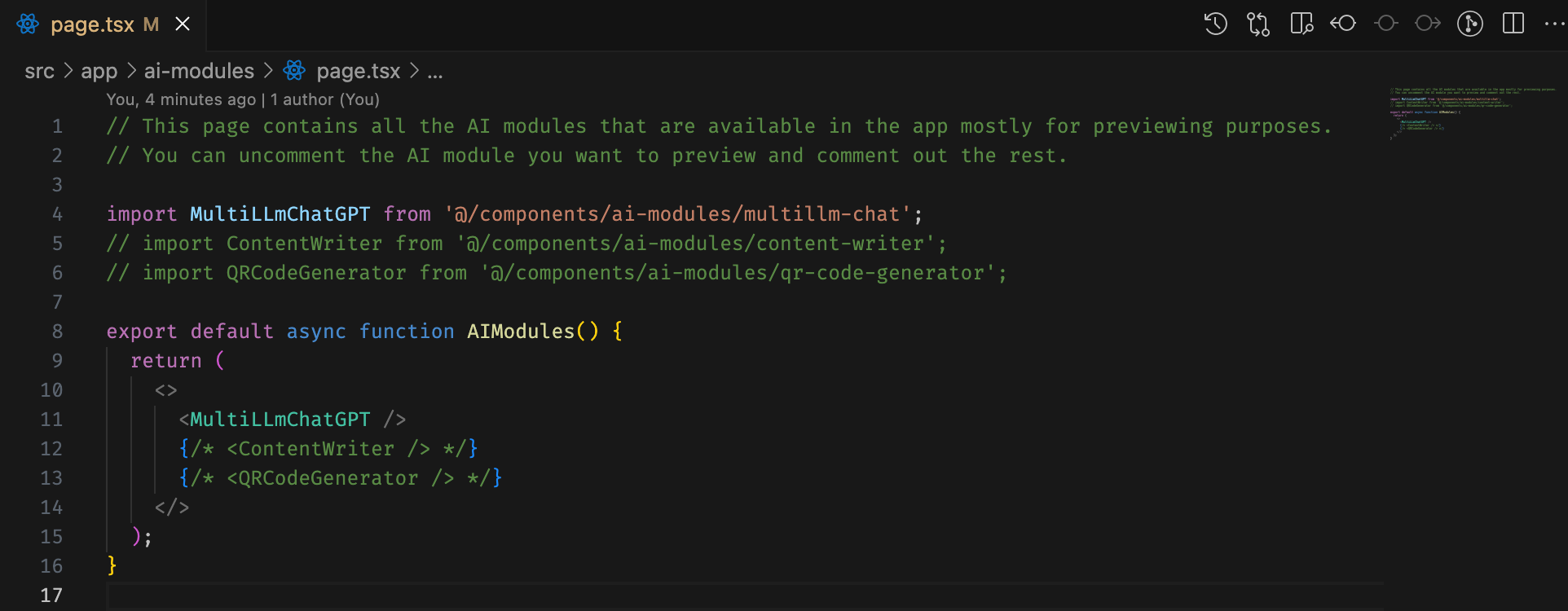

Simply import the MultiLLmChatGPT component where you want to use the MultiLLm ChatGPT module as shown below:

```tsx
import MultiLLmChatGPT from '@/components/ai-modules/multillm-chat';

export default async function MultiLLmChatGPTPage() {
  return <MultiLLmChatGPT />;
}
```

For testing, go to /src/app/ai-modules/page.tsx in the main branch and enable the MultiLLmChatGPT component as shown below: